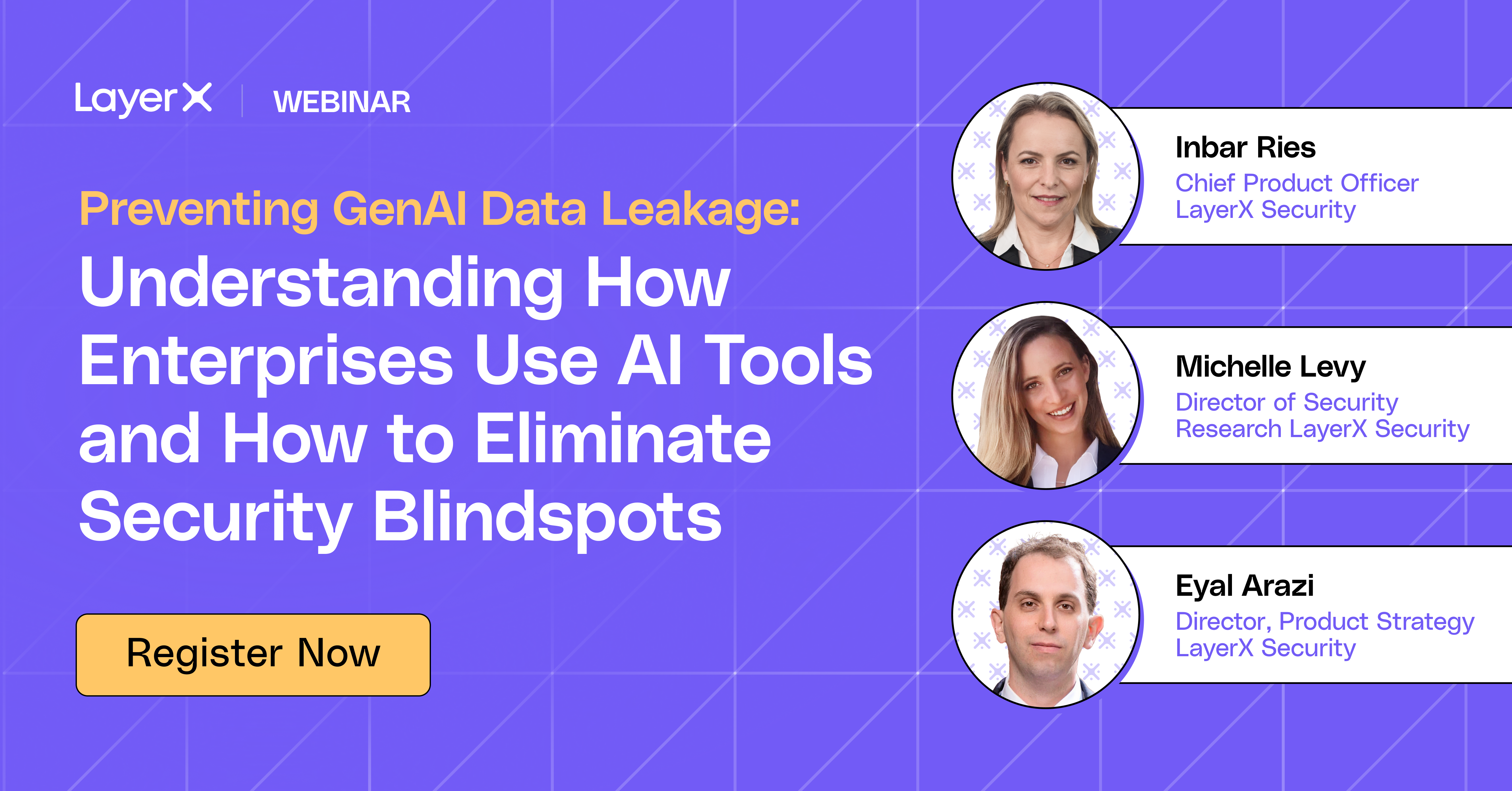

Join LayerX’s security leaders for a practical deep dive into how enterprises are using GenAI tools and how to eliminate the hidden risks before they lead to data exposure.

GenAI is rewriting the rules of workplace productivity, and quietly introducing one of the biggest data leakage risks enterprises have ever faced.

In this exclusive session, LayerX product and research leaders unpack how employees are really using GenAI tools across the enterprise, what kinds of sensitive data are being exposed, and why most existing security tools can’t see or stop it. From “shadow AI” accounts to the accidental training of public LLMs on corporate data, you’ll walk away with a clear understanding of the risks and the controls you need to stay ahead.

This is your playbook for securing GenAI usage without blocking innovation, and without losing visibility.

What you’ll learn:

📈 Real-world data on how, where, and why employees are pasting sensitive content into GenAI tools

🕵️‍♀️ The biggest blind spots of traditional security tools when it comes to AI usage

🔐 What types of sensitive data are most at risk, and why GenAI changes the DLP game

⚙️ The policy and control framework that enables safe, monitored GenAI usage

📚 Lessons learned from security teams already tackling these challenges in the field

Chief Product Officer, LayerX Security

Director of Security Research, LayerX Security